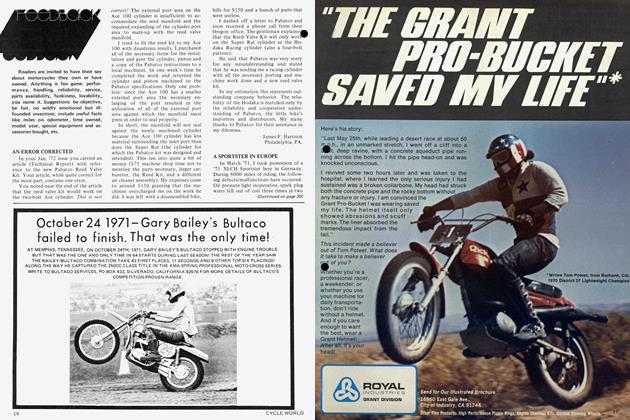

"THE HELMET MAN"

Which Is The Better Helmet? And Who Says It Is? Who You Believe May Make A Difference, As You'll Discover For Yourself In This Deeply Probing Examination Of Dr. Snively And The Snell Foundation, And The Imperfect State Of Helmet Standard Writing.

By J. G. Kroll

PART ONE OF TWO PARTS

IT WOULD BE nice to follow up on "Crunches, Crashes & Crushbuckets" (CW, Sept. 1971) by saying here are the facts...bing, bing, bing...this is true, this is false. But technological and institutional conditions do not permit so straightforward an approach.

The knowns about head protection (and they’re considerable) still seem outnumbered by the unknowns. Skimpy facts and differing viewpoints still create heated controversies. This report will try to describe some of those controversies from the perspective of helmet users, and if schlock helmet manufacturers, power-hungry bureaucrats, excitable “safety experts,” ivory tower researchers, unprincipled politicians or greedy retailers are sitting in, well, my first concern is for motorcyclists I know and like, for riders who want to know their options in head protection and who want to make their own informed decisions...wily old desert rats skeptical about the unproven claims of new products, seasoned road riders bearing scars of close escapes, enthusiastic teenagers hungry for useful information, flint-eyed racers seeking the best protection money can buy, and even an outlaw opponent of safety helmets willing to put his head, so to speak, where his mouth is.

In all the helmet industry the one organization best attuned to the interests of helmet users is the Snell Memorial Foundation. The Snell Foundation was incorporated in 1957 by a group of San Francisco area sports car racers when they realized that a helmet which had failed on impact had contributed to the racing death of William H. (Pete) Snell.

Unlike the memorial foundations designed by rich, vain men to immortalize their names and escape the sting of inheritance taxes, the Snell Foundation is staffed by volunteers and is a financial pygmy compared to the ones you read about in the newspapers. Yet it can submit a convincing claim, on the tough-minded scale of sheer body count, to accomplishments rivaling those of foundations a thousand times its size. More Americans die of head injuries in vehicle crashes than of military combat, malnutrition, or exotic diseases.

USER-ORIENTED GROUP

The main technical brains behind the Snell Foundation are Dr. George G. Snively (West Coast) and Dr. C.O. Chichester (East), but through their medical, industrial, academic, governmental and racing contacts they can call upon a considerable pool of volunteer technical talent. Everybody involved is a helmet user, or is pretty strongly user oriented. One of the foundation’s directors is Pete Snell’s widow, Marjorie. Dr. Snively, not a motorcyclist, has raced sports cars, was once the importer for the entire Western Hemisphere of the British WSM sports-racing car, and presently maintains a stable consisting of (1) a retired Dodge Highway Patrol car, (2) a 351 Chevy-powered Austin-Healey, and (3) an MG into which he’s managed to shoehorn a 289 Ford Cobra engine.

This garage would be unusual enough for a quiet, curving, tree-infested lane in the Sacramento suburbs were it not for the specially constructed two-story annex that houses a brilliantly lighted, immaculately clean laboratory in which safety helmets of all kinds are weighed, soaked, baked, frozen, pierced, dissolved, cut, pulled apart, broken and smashed in order to learn the limits of their performance. This is the Western facility in which a helmet’s right to wear the sticker “Snell 1970” is either won or lost (Chichester has a similar lab in the East).

The first public report from the Snell Foundation was Dr. Snively’s pioneering article in the July 1957 issue of Sports Cars Illustrated. Here he revealed that, according to his first crude tests, only one of the six helmets then available provided reasonable head protection, and even that one had an inadequate chin strap and fastener.

Helmets, testing methods and Snell standards have come a long way since then, but one thing has remained the same. In their obviously nervous introduction to Snively’s article, in which he named names and condemned all but one, the editors of SCI observed that, “Manufacturers of the helmets concerned might not take too kindly to the publication of the data contained herein.”

Today, as 15 years ago, some manufacturers react similarly, with the added complication, however, that the industry’s growth has brought new groups into the picture, any one of which may scream when its ox is gored. Today, according to an official summary, every state with mandatory helmet performance requirements accepts Snell certification—except the state of Alabama, where a single strong-willed government official is personally opposed to Snively’s approach. Today it is not only manufacturers who “might not take too kindly” to the Snell Foundation’s pronouncements.

What is George Snively’s approach? Why does it impress some people and infuriate others? How does it compare to other possible approaches to head protection?

Why does Harry Monheit of Safetec say, “Snively is two floors above God as far as helmets are concerned,” while others in the industry scoff at the need for Snell approval of their products?

The first and probably best known part of the Snell approach is educational and centers on helmet analysis, evaluation and certification. To understand it we have to first examine the basic question: What is the “best” crash helmet?

Now the “best” crash helmet is not perfect, whatever single thing we might have in mind by “perfect.” More protection can always be gained by increasing weight and bulk, a lower price by decreasing the number of sizes, a wider variety of styles by increasing the price. We can demand more protection against cosmic rays, but perhaps only at the sacrifice of protection against man-eating sharks. Whatever benefit we seek in one area will entail some cost in another. This, of course, is the normal state of affairs for any manufactured product.

"BEST" IS NOT PERFECT

The “best” helmet is an optimum helmet, which implies that the only thing we do perfectly is to trade off among conflicting and inconsistent objectives in order to reach the optimum compromise. We want the optimum balance between, say, weight and impact protection.

In order to do this, two things are needed: a set of requirements that explain how to compare and to weigh objectives that are individually desirable but jointly incompatible; and a means for measurement by which we can compare different designs to determine which best fulfills the requirements, hence, which is optimum. In other words, we need a way of deciding what a helmet ought to do, taking all things into account, and a way of deciding what it does do. Requirements should be carefully distinguished from specifications, and the difference is easy to see. A requirement tells how a helmet is to work, a specification how a helmet is to be made. The one is a functional description, the other a physical description.

This distinction is important for a very practical reason: it is quite difficult to establish sound requirements but it is easy to write specifications. To write good requirements you have to really understand the problem in all its parts and all their interactions. To write specifications all you have to do is toss around some jargon and pick some numbers out of the air.
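To make the distinction concrete, here is a minimal Python sketch; it is an illustration only, not anything Snell or ANSI publishes. It assumes a hypothetical `Helmet` record with two fields: a reflector area (a physical attribute, so checking it is a specification) and the steady pull the retention system held in test (a measured function, so checking it against the 300-lb. figure the Snell standard uses is a performance requirement). The 4-square-inch reflector minimum is an invented placeholder, which is rather the point.

```python
from dataclasses import dataclass


@dataclass
class Helmet:
    """Hypothetical test record for one helmet model."""
    reflector_area_sq_in: float  # physical attribute: how the helmet is made
    retention_held_lb: float     # measured result: steady pull the strap system held


def meets_reflector_spec(h: Helmet, min_area_sq_in: float = 4.0) -> bool:
    # Specification: checks construction. The 4.0 sq. in. default is an
    # arbitrary placeholder; nothing in it says why more or less would matter.
    return h.reflector_area_sq_in >= min_area_sq_in


def meets_retention_requirement(h: Helmet, required_pull_lb: float = 300.0) -> bool:
    # Performance requirement: checks function. 300 lb. is the steady pull
    # the Snell 1970 retention test calls for.
    return h.retention_held_lb >= required_pull_lb


# A helmet can satisfy the specification and still fail the requirement.
h = Helmet(reflector_area_sq_in=6.0, retention_held_lb=250.0)
print(meets_reflector_spec(h), meets_retention_requirement(h))  # True False
```

The specification is trivial to enforce (measure the sticker), but passing it says nothing about how the helmet behaves in a crash; only the functional check does.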

There is a very real danger that people in government or industry will issue standards that are not true performance requirements, but merely design specifications. Specifications are not only easier to write, but also to enforce.

Example: several states have imposed legal specifications on the area, shape, location and type of reflective material which must be on a motorcycle helmet. Any high-school drop-out could do the same. Yet, as far as I have been able to determine, nobody has the slightest idea what really is the requirement for helmet reflectors.

Even if everybody agrees that reflectors would be “nice to have” or a “good idea,” we still would have no definite requirements for exactly what they’re supposed to do, exactly how much reflection they’re supposed to provide, why this much and not more or less, what things would be affected by changing the reflectors and by how much.

Nor does the specification give us any effective way of measuring the performance of this or that reflector design and deciding which is better. The simplistic approach of “more is better” leads ultimately to absurdity or irrelevance.

Snively strongly insists that Snell standards be oriented as much as possible toward performance requirements rather than design specifications. The catch is that nobody presently knows enough about helmet requirements to be sure they’ve defined the optimum trade-off among all conflicting objectives. Besides, the optimum is continually shifting as new materials are discovered, new manufacturing processes perfected, new test data revealed. In many ways a performance requirement is harder to defend, and open to wider criticism, than a simple, arbitrary specification.

PERIODIC UPGRADE

The Snell standard is periodically upgraded to accommodate advances in helmet technology, Snively’s goal being to identify that small fraction of all the models on the market which provide, relatively, the most head protection in a crash. This means the requirements are based as much on what’s available as on what’s desirable; there’s not much value to a requirement nobody can fulfill.

The current standard is Snell 1970, which replaced Snell 1968, and which will soon be replaced by tighter requirements. Snively’s criteria for upgrading the Snell requirements are (1) at the time a tougher standard is introduced at least two companies should be able to meet it, thus assuring competition and avoiding Snell’s endorsing a single outfit; and (2) the standard is due for revision whenever too many manufacturers are able to meet it.
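Snively’s two criteria can be read as a simple decision rule. The Python sketch below is only an illustration under stated assumptions: the article gives no number for “too many,” so `too_many` is a hypothetical parameter, and the names “Maker A” and “Maker B” are placeholders. Counting companies rather than approved models follows the wording of criterion (1).

```python
from __future__ import annotations


def may_introduce(companies_meeting_new_standard: set[str]) -> bool:
    # Criterion (1): at least two companies must be able to meet a tougher
    # standard, so that Snell is never endorsing a single outfit.
    return len(companies_meeting_new_standard) >= 2


def due_for_revision(companies_meeting_current: set[str], too_many: int) -> bool:
    # Criterion (2): revise once "too many" companies meet the current standard.
    # `too_many` is a hypothetical threshold; the article does not give one.
    return len(companies_meeting_current) >= too_many


# The nine firms listed as having at least one Snell 1970 model.
snell_1970 = {"ARAI/H-A", "ASC", "Bell-Toptex", "Daytona", "D-S",
              "Fipro", "Premier-Pacific", "Safetec", "Yoder"}

print(due_for_revision(snell_1970, too_many=8))  # True under this assumed threshold
print(may_introduce({"Maker A"}))                # False: one company is not enough
print(may_introduce({"Maker A", "Maker B"}))     # True
```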

At the moment, out of an industry with a dozen or so major manufacturers and perhaps fifty all told, nine firms have at least one Snell 1970 approved model. These are: ARAI/H-A, ASC, Bell-Toptex, Daytona, D-S, Fipro, Premier-Pacific (not related to Premier Seat & Accessory Co.), Safetec and Yoder. Please note that Snell approval is given to particular helmet models, not to brand names or to manufacturers, and by the time you read this the list may have changed. No matter.

The thing that counts is the serialized Snell label inside the helmet on your dealer’s shelf. Manufacturers often make running changes in their models. Some of these are improvements, some aren’t, so the list of Snell approved helmets is always changing. With nearly a dozen models now enjoying Snell 1970 approval we can expect an upgrading of the requirements, which will probably be identified as Snell 1972.

Since the Snell requirements are principally relative, each manufacturer on the list must engage in continuing competition against the state of the art in order to maintain his approval. The Snell approval is intended to identify a helmet as “better than most available,” which is subtly but importantly different from an absolute judgment of its performance.

AREAS OF PERFORMANCE

The Snell standard considers four areas of performance: environmental resistance, retention system, energy management and quality control.

Environmental resistance is the helmet’s ability to shrug off all the things likely to happen to it prior to an actual crash. The key to this requirement is “likely.” Snively reckons that anything that happens to a motorcycle, or to any of the usual motorcycle accessories and accouterments, is likely to happen to a motorcycle helmet. So he checks helmet impact performance after exposing the hat to 14-deg. F cold and 122-deg. F heat, to water soaking, to direct sunlight (which contains nasty ultraviolet radiation), and to common chemicals used around the body (e.g., hair dressing) and the motorcycle (e.g., gasoline, oils, racing fuels, paints).

There is widespread disagreement about Snively’s environmental resistance tests. It is pretty generally accepted that fiberglass (F/G) shells are more nearly chemically inert than polycarbonate (P/C) shells, though by no means is F/G totally inert. After all, polyester, epoxy, and polycarbonate resins are just three plastics out of millions; there’s nothing magic about polyester or epoxy. General Electric, whose “Lexan” accounts for about 80 percent of the P/C helmet shell market (the rest coming from Mobay), publishes an 18-page tabulation of the extensive tests it has run on Lexan’s resistance to over 700 common chemicals. If my arithmetic is correct, this involved 23,109.9 days of testing, over 63 continuous years of exposure of Lexan samples to reagents.
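A quick check of that conversion, assuming an average year of 365.25 days:

$$\frac{23{,}109.9\ \text{days}}{365.25\ \text{days/year}} \approx 63.3\ \text{years}$$

so “over 63 continuous years” holds.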

The results? P/C is affected by acetone, carbon tetrachloride, Freon, gasoline, Loctite, methanol, benzene, high ozone levels, toluene, turpentine, and by some detergents, brake fluids, paint thinners, lacquer thinners, soldering fluxes, marking inks, adhesives, cements, hydraulic fluids, oils, greases, synthetic oils, and varnishes. However, P/C is not affected by 25 percent acetic acid, some cleaning solvents, nickel and copper electroplating solutions, 35 percent sulfuric acid, or a full year’s immersion in water.

THE POLYCARBONATE ISSUE

Snively argues that P/C helmets are likely to be exposed to reagents which may weaken or embrittle them, and that the risk is high enough that no P/C helmet has ever received Snell approval. A couple of dealers I checked with have stopped carrying P/C helmets, and one manufacturer will phase out his single remaining P/C helmet as soon as he can get out from under the costs of his tooling. The mold alone for P/C shells can run over $25,000. All three cited the dangers of chemical damage as a major reason for moving away from P/C. Several other manufacturers have already given up on P/C.

On the other hand, General Electric is actively searching for a coating that will not react with the P/C shell, will bond permanently, and will protect the shell against hostile chemicals.

Gary Bill Lovell, executive director of the Safety Helmet Council of America (SHCA), the trade organization whose nine active member manufacturers produce over three-quarters of all crash hats, would not release any definite figures on the market shares of P/C and F/G, but he did announce the SHCA attack on the “chemical susceptibility” problem of P/C. Every SHCA member-made P/C helmet will soon bear a sticker warning the user to apply no chemicals to the shell. To clean or to paint the helmet, he should use only those products recommended by the manufacturer. Approved, safe products will be listed, as I understand present plans, in a booklet packed with each helmet. Furthermore, SHCA is mounting a nationwide promotion whose goal is to carry the chemical susceptibility warning, via posters, into every single motorcycle dealership and accessory store in the country.

HOW MUCH IS TOO MUCH?

Some SHCA members make only P/C hats, some only F/G, some both kinds. Indeed, several of the Snell 1970 approved helmets are made by SHCA members. So it would seem precipitate to dismiss the SHCA approach as ineffectual and self-serving. An ordinary household contains hundreds of different materials and chemicals and most of us, most of the time, manage to use them safely and within their limitations.

Of course, there are occasional accidents, injuries, even disasters. F/G is relatively inert under most conditions, but you’d better not make the mistake of mixing together the catalysts for epoxy and polyester resins. How much risk is tolerable? How much is too much? How much user experience and self-discipline can be assumed? Should the manufacturer give specific lists of what can and cannot safely be brought in contact with a P/C shell? Or could this only confuse people, and would it be better to include one of those vague, abstract, universal “Use only as directed” statements?

Not the SHCA, not George Snively, not you, not anybody can answer these questions with anything but opinions. We don’t know the requirements, and we can’t measure the degree of their fulfillment.

SNIVELY ON POLYCARBONATE

There is nothing written into the Snell 1970 standard which prohibits P/C as such, nor does Snively oppose it as such. He recognizes its advantages over F/G: in certain kinds of impacts P/C can be significantly tougher than F/G; it is better suited to mass production, P/C shells being made semi-automatically on injection molding machines, F/G shells being laid up by hand; the more highly automated production process for P/C shells holds the potential for tighter quality control (the popular contrary belief notwithstanding); and it is much less costly than F/G, one reliable estimate being a $15 savings at retail.

To demonstrate his freedom from systematic bias toward or against any one material, Snively is eager to point out an industrial hard hat (Snell deals with all kinds of protective hats) which he has tested and found to be one of the best available. Its shell is made from laminated cotton fabric embedded in, of all things, phenolic resin. It looks much like an electronic circuit-board, yet it works just fine in its particular application.

Furthermore, Snively foresees significant advantages for a nylon cloth/polyester resin laminate, compared to traditional F/G, especially in applications where cutting or piercing loads are severe. And he has tested helmets with titanium shells. These gave outstanding performance in impact tests, but are much too costly to be practical. Cost is always part of the equation describing an optimum helmet. Snively has worked with shells of ABS, polypropylene, polyethylene, and PVC. He’s even tested pith helmets and has found that they do a surprisingly good job of head protection.

In short, he is neither wedded to F/G nor uniquely opposed to P/C, though based on what he believes a motorcycle crash helmet ought to do, a P/C helmet can’t do that job. The dispute between Snively and the proponents of P/C shells is not basically about materials at all, but about requirements. There’s just no escaping either the difficulty or the importance of setting requirements.

HELMET RETENTION STANDARDS

The second area of Snell requirements deals with the retention system, which must hold against a steady 300-lb. pull. Dr. Snively provided an instructive example concerning this requirement. Unlike the matter of environmental resistance and shell materials, it does not pit him against manufacturers or anybody else in the domestic helmet industry, yet it illustrates how treacherous and divisive identifying requirements can be.

At a recent international conference on helmet standards a European delegation proposed a maximum limit on strap strength. Their reasoning was eminently plausible. Beyond, say, 100 lb. tensile strength a strap is likely to do more damage than good to the user. Should the helmet get caught, somehow, an excessively strong strap could strangle the user, break his neck, or even decapitate him. The delegation painted some vivid word pictures of these gruesome possibilities and rested its case.

Dr. Snively opposed the proposal, and not the least of his reasons must have been that a maximum 100-lb. test would, if adopted, have made his own minimum 300-lb. test look decidedly inappropriate. Interestingly, neither side had any facts to support its proposal, so everybody agreed to table the motion until the next session, some months later, giving everybody time to do their homework.

STRAP STRENGTH DATA

Snively’s homework began with a search of the crash records of the CHP and the LAPD, between them the largest combined police motorcycle operation in the world. He did not find a single case of death or serious injury which could be attributed to excessive strap strength. So far so good, but only negative data. What would happen if a cyclist were thrown through the air at high speed and the edge of his helmet caught on an immovable object, like the corner of a truck bumper? Conveniently, an incident at a nearby racetrack provided a perfect experiment.

A race driver, after completing his victory lap, took a shortcut back to the pits by driving between a pair of sand-filled steel drums connected by a wire rope to block the road. Evidently he figured he could slip under the cable in his semi-reclining driving position. He didn’t quite make it. The wire rope caught under the front edge of his helmet and plucked it off his head as easily as pulling petals from a daisy. Though a bit sore about the neck and chin, he was unhurt. Now the helmet he was wearing happened to be a model Dr. Snively had tested many times. The breaking strength of its retention system was known to exceed 600 lb.!

As you’d expect, Snively’s views on strap requirements, backed up by these findings, carried the day at the next session of the international conference.

In fact, not even the original proponents of the maximum strength requirement had been able to find a single actual instance of a strap causing serious injury by being too strong.

Four general and valuable lessons can be learned from this episode. First is the general lack of sound, pertinent empirical data on the mechanics of head injury. Data are being accumulated, but very slowly, since nobody can experiment on human beings. This means that nobody today is in a position to define hard, fast, scientific, comprehensive helmet performance requirements.

The second lesson is that even impartial experts can disagree completely in their perception of requirements. Disagreements about requirements, materials, etc., are not necessarily evidence of selfish interest or evil intent. Years from now we will very probably look back on current disputes and realize that both parties, each in his own way, were wrong on the matter and that some third alternative they hadn’t even considered had finally emerged superior. Only in the comics does the existence of a dispute automatically imply that one side is good guys, the other bad guys. Only in comics do good guys wear white crash hats.

THE IRRELEVANT EXPERIENCE

The irrelevance of your own firsthand experiences to general helmet requirements is the third lesson. When Dr. Snively started to tell this incident he really grabbed my attention, for my chin still bears a nasty scar where a helmet strap actually crushed and tore the flesh. Yet his data, borne out by others in the industry, indicate that this was a freak occurrence.

Optimum requirements take all things into consideration, weighting them by the frequency of their occurrence and the severity of their result. The game is essentially statistical, and this shows the pathos and irrelevance of those letters sometimes seen in motorcycle magazines, “I wouldn’t never wear a helmet ’cause I didn’t like them. Then I went on my head and hurt myself. Now I always wear a helmet and I think there oughtabealaw to make everybody wear one.”
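One way to write down the weighting described above, offered as a sketch rather than any formula Snell actually uses: let crash situation $i$ occur with relative frequency $p_i$, and let $s_i(d)$ be the severity of injury in that situation for a helmet design $d$. The expected harm of the design is then

$$H(d) = \sum_i p_i \, s_i(d)$$

and the optimum design is the one that minimizes $H(d)$ among designs acceptable on weight, comfort and cost. In these terms the strap dispute asks whether capping strap strength removes more harm from the rare situation where a helmet catches on something than it adds in the common situations where the helmet must stay on, and one rider’s scar is a single observation toward estimating a single $p_i$.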

Your personal experiences are but a tiny statistical sample, and you may be wiped out in the process of accumulating them. Like a good poker player you have to go by the odds. The experts, like Snively, have seen so much more data than you ever will on your own that they’re much better able to estimate the true odds—though not as well as they’d like to.

The fourth lesson of the international strap dispute is that pointing out some terrible, gory thing that might happen is absolutely worthless as justification for a proposed requirement. Scare-mongering is worthless precisely because it concentrates on one possible outcome to the exclusion of all others.

Yet the very essence of defining the optimum helmet is a balance among all possible situations and outcomes. A skillful scare-monger can make an emotion-charged case for (or against) anything, even breathing. Haven’t some people been injured in bed by ceiling plaster knocked loose by an earthquake? Don’t we want to protect people against earthquakes? Doesn’t that prove husbands and wives should wear Bell Stars to bed? Wouldn’t this have the added benefit of curbing the population explosion? And wouldn’t that in turn help the ecology?

The real tragedy is that the scaremongering approach is most successful exactly where hard data are most lacking.

THE SUFFOCATION SCARE

Some British doctors, it is reported, have made headlines by opposing total-coverage helmets on the grounds that an unconscious rider may suffocate in the helmet, or he may vomit and, in effect, drown himself. Here are the classical elements of scare-mongering.

The shocking and disgusting images of wounded riders dying in their own puke and snot. The studied concentration on a single aspect of the total-coverage hat. An argument couched in terms of possibilities rather than actualities. No comparative statistics on how often riders are benefited by total-coverage, how often harmed by it. And not least of all, a note of self-righteous certitude that, “We know what’s good for you better than you do.”

ENERGY MANAGEMENT

Facts and numbers are beginning to become available in the third area of Snell requirements, energy management. There are now several good testing facilities around the country and a lot of test helmets are being poked and prodded. The most conspicuous lack is not of raw test data, but of ways to interpret it, i.e., of better theories. All a theory really is is an efficient and compact way of organizing a mass of experimental data...though it’s easy to lose sight of this simple fact when confronting a theory that’s been highly refined over many years and is expressed in formidable mathematics.

One needed theoretical advance, Snively says, is to improve mathematical models for the dynamics of the shell, liner, restraint system, skull, brain and intracranial fluids. Such models would enable analysis and simulation of the impact transient. Researchers could then study the conditions for optimum head protection, and helmet design would become science instead of art and guesswork. I’d estimate ten to twenty years before current work in this area pans out.

Theoretical advances are also needed in correlating data on the limits of the head’s tolerance for mechanical inputs, whether single, sharp blows, or steady accelerations, or high frequency vibrations. The problem is to compare the results of tests on laboratory animals such as rabbits, monkeys, cats, and calves with the tolerances of the human head, taking into account the differences in brain, bone, tissue, fluid and total head masses, the differences in external and internal shapes, and the differences in densities, strengths, stiffnesses and viscosities of the materials.

Despite the evident complexity of the problem, the outlook for its solution is bright, for dimensional analysis has scored many successes in aerodynamics, mechanics, physics and other subject areas, and there exists a broad background of methods and experience on which to draw. I would not be surprised if useful results were obtained in as little as five years.

Meanwhile, Dr. Snively wishes he had the money to experiment with the head impact tolerances of black bears which, curiously, have a head configuration very close to man’s...close enough that the findings could be applied directly to human head protection without having to correct or scale the data. Such experiments are too expensive for the Snell Foundation to undertake on its own, but they are well within the scope of the federal government, which is rapidly moving in on the head protection field.

No doubt the quickest way to gain the needed information on exactly what loads the human head can and cannot tolerate would be for Chairman Mao to sacrifice 100,000 Chinese Communists to the glorification of international revolutionary proletarian science; at least this would quell the wrath of animal lovers, who would be more vexed by the controlled deaths of as many bears. To do a serious subject justice, Snively notes that most test animals have previously undergone unrelated medical, chemical or surgical experiments and are not viable at the time they are obtained for head impact tests. A massive blow to the head, as part of a helmet experiment, is perhaps the quickest, most painless and most humane way of disposing of them.

In sum, refinement of energy management requirements requires advances in (1) statistical data on the dynamics of blows imparted in actual crashes (inputs), (2) consistent data on the tolerances for brain motions, accelerations, pressures and the like (outputs), and (3) mathematical models that relate the inputs to the outputs by means of helmet design parameters. Were all these factors available, performance requirements could be drawn for an optimum helmet. In the meantime, somebody is going to have to do some guessing.

SNELL vs. Z90.1

Paradoxically, an independent, advisory, premium standard like Snell 1970 is easier to define under present conditions of scientific uncertainty than a consensus, compulsory, baseline standard like Z90.1 (SHCA, AAMVA, MSATA, AMA, and most state standards are merely particular institutional integuments of Z90).

George Snively is the chief architect of the Snell standard and is also the Chairman of the American National Standards Institute’s (ANSI) Z90 Committee. Consider how different his two standard-setting jobs really are. At Snell he has a small group, homogeneous in its views and independent of outside “political” considerations; at ANSI the standard-setting group is not only larger and more diverse, but is specifically chartered to include the broadest representation of interests and viewpoints.

The Snell standard is purely advisory and nobody is prevented from making, selling or using helmets just because they’re not Snell approved; the Z90 standard, while theoretically voluntary, is in fact the legal basis in a majority of states for permitting sales in that state and for complying with mandatory helmet-wearing laws. Finally, the Snell standard is intended merely to assist users in identifying the top few percent of available helmets, judged principally on crash protection, which makes it essentially relative in outlook. Z90 has the tougher job of defining “adequate minimum” protection, much more an absolute measure of helmet performance.
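The difference between a relative and an absolute standard can be sketched in a few lines of Python. This is a deliberate simplification: it assumes each helmet model can be summarized by one hypothetical protection score (higher is better), which no real standard does, and the model names, scores, floor and top fraction below are all invented for illustration.

```python
from __future__ import annotations


def absolute_pass(scores: dict[str, float], floor: float) -> set[str]:
    # Baseline (Z90-style): every model at or above a fixed floor passes,
    # however many models that turns out to be.
    return {model for model, s in scores.items() if s >= floor}


def relative_pass(scores: dict[str, float], top_fraction: float) -> set[str]:
    # Premium (Snell-style): only the best-performing fraction of the
    # models actually on the market passes (at least one model always does).
    ranked = sorted(scores, key=scores.get, reverse=True)
    keep = max(1, int(len(ranked) * top_fraction))
    return set(ranked[:keep])


market = {"A": 9.0, "B": 7.5, "C": 6.0, "D": 5.0, "E": 4.0}
print(sorted(absolute_pass(market, floor=5.0)))          # ['A', 'B', 'C', 'D']
print(sorted(relative_pass(market, top_fraction=0.4)))   # ['A', 'B']

# If every model improves, more of them clear the fixed floor, but the
# relative cut still admits only the leaders.
improved = {m: s + 2.0 for m, s in market.items()}
print(sorted(absolute_pass(improved, floor=5.0)))        # ['A', 'B', 'C', 'D', 'E']
print(sorted(relative_pass(improved, top_fraction=0.4))) # ['A', 'B']
```

The second pair of results is the “continuing competition against the state of the art” in miniature: under a relative standard, general improvement across the industry does not by itself earn anyone approval.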

Because of these differences Snell can adapt quickly to any new development in helmets while ANSI, by its very intent of coordinating all views, must move more slowly. If somebody came up with a hypercrystallized beeswax shell with a corn-flake liner, Snively says he would get on the phone and, within a couple of days, would have devised tests to probe the peculiarities and potential weak points of these materials. ANSI, on the other hand, could take a couple of years to react, during which time hundreds of thousands of unproven helmets might reach the market.

DIFFERENT STANCES

When a standard contains an element of compulsion it must be established in full awareness of the responsibilities involved. Nobody prefers a government of men to a government of laws, unless he figures to be one of the men doing the governing.

Thus the ANSI standards and test procedures must be narrowly defined, scrupulously observed, and rigidly applied. To demand less would be to place everybody at the mercy and whim of faceless bureaucrats. Because the Snell standard is non-coercive it is proper for Snively to say, “I’m working for the consumer, not the manufacturer.”

Power and responsibility should go together, or so we’ve always been advised. Precisely because he has no power over manufacturers, distributors and retailers, except through persuasion and example, Snively of Snell has much lighter responsibilities toward the commercial side of the industry than does Snively of ANSI.

Being so deeply involved in both sets of standards leads to some amusing situations for Snively. Wearing his ANSI hat, for instance, Dr. Snively recently chaired a special laboratory correlation committee whose job was to increase the consistency of testing among the three labs approved by SHCA to certify compliance with Z90 (Dayton T. Brown, AETL, Underwriters) and among several other independent commercial labs. The goal was to get identical conclusions from every lab on whether a helmet passed or failed Z90. The study went into the most minute details of the test procedures, defining everything as precisely and rigidly as possible. As a result, it is hoped that all labs will be safely within a plus or minus 10 percent margin of error on all their measurements.

Yet in the tests he himself conducts under the Snell standard Snively does exactly the opposite. Instead of impacting every helmet in exactly the same place, he tries very hard to find the weakest spot in the helmet, wherever it may be. Instead of applying the letter of the Snell requirements with robot-like consistency, he applies discretion and makes an overall judgment on the hat.

Though he wouldn’t say so right out, and though he emphasized that nearly all helmets either clearly pass or clearly fail the Snell tests, it is easy to get the impression that a helmet would not win Snell approval if, in each area of testing, it barely, marginally met the requirements. Naturally this exposes Snively to rebukes from irate manufacturers who feel that he is biased against their product, or company, or themselves personally. Yet it does seem that a consumer-advisory standard ought to be applied according to its spirit, just as a coercive standard ought to be applied according to its letter. They’re different forces fighting different battles. They ought to march to different drums.

"TWIGGY AND RAQUEL"

And because Z90 is trying to set a baseline while Snell is trying to skim the cream, the technical justifications for their requirements are as different as Twiggy and Raquel. It is intrinsically more difficult to define an absolute level of required head protection than to draw relative distinctions among levels of performance.

Any competent rider needs only a few laps around a motocross course to decide that a stock Husky, Maico or CZ handles better than a stock trail bike. That’s a relative choice. But what would it take to define an absolute handling requirement for all motorcycles? Nobody’s ever done it and it’s hard to see how anybody could. This has important implications for energy management requirements.

NO GUARANTEES

Recall that to define energy management requirements completely you’d have to know all about the inputs, the outputs, and the dynamic relations between the two. The facts being so skimpy, nobody can say definitely that Z90 (or any other standard) will guarantee X percent survival rate in motorcycle crashes, or that it will reduce head injuries by Y percent.

Yet these are exactly the kinds of statements we hope can be made, someday, about the baseline standard. We can’t do it now.

In contrast to this, it’s relatively easy to skim the cream. More penetration resistance is surely better than less, lower peak transient acceleration is surely better than higher. Okay, all we have to do is to tighten up the quantitative requirements on these variables until only a half dozen helmets pass.

We can’t be sure that these are exactly the six “best” in terms of protection; to assure that we’d have to know every bit as much about head mechanics as to set a baseline standard. But who cares? As long as the form of the requirements is reasonable, the six hats selected will almost surely be among the top dozen. When Snively gives Snell approval to, say, 10 helmets he doesn’t expect anybody to believe that these are exactly the 10 best helmets for head protection. All he implies to the consumer is that these 10 are very near the top of the heap.

The relative nature of the Snell standard explains a lot of things. It explains why some manufacturers, whose hats have not won Snell approval, can be adamantly convinced their products are good, or even excellent. They may very well be. But nobody can tell for sure until much more empirical and theoretical knowledge becomes available, and until helmet requirements can be defined much more precisely.

SNELL'S SHORTCOMINGS

It also explains certain curious technical gaps in the Snell 1970 standard. For example, Z90.1 specifies not only the peak of the acceleration transient (not over 400 gs) but also places limits on its shape by stating how long the acceleration can exceed certain lower levels. Snell 1970 simply limits peak acceleration to 300 gs.
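The difference between a peak-only criterion and a criterion that also limits the shape of the pulse can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the trace is a list of acceleration samples in g taken at a fixed interval, "time above a level" is approximated by counting samples, and the dwell levels used (200 g for 2.0 ms, 150 g for 4.0 ms) are placeholders chosen for illustration, not figures quoted from Z90.1. Only the 400 g and 300 g peak limits come from the text above, and the two traces are made up.

```python
"""Peak-only versus peak-plus-duration acceptance checks (illustrative sketch)."""

def time_above(samples_g, dt_ms, level_g):
    """Approximate cumulative time (ms) the trace spends above level_g,
    counting every sample over the level as one full sampling interval."""
    return sum(dt_ms for a in samples_g if a > level_g)

def passes_peak_only(samples_g, peak_limit_g):
    """Snell 1970 style: only the highest reading matters."""
    return max(samples_g) <= peak_limit_g

def passes_peak_and_dwell(samples_g, dt_ms, peak_limit_g, dwell_limits):
    """Z90.1 style in form only: a peak limit plus limits on how long the
    trace may stay above lower levels. dwell_limits holds (level_g, max_ms)."""
    if not passes_peak_only(samples_g, peak_limit_g):
        return False
    return all(time_above(samples_g, dt_ms, level) <= max_ms
               for level, max_ms in dwell_limits)

# Assumption: hypothetical dwell levels, for illustration only.
PLACEHOLDER_DWELL = [(200.0, 2.0), (150.0, 4.0)]

# Made-up traces sampled every 0.5 ms: a short, sharp pulse and a long, flat one.
sharp = [0, 50, 100, 150, 200, 250, 200, 150, 100, 50, 0]
flat = [0] + [170] * 12 + [0]

for name, trace in (("sharp", sharp), ("flat", flat)):
    print(name,
          passes_peak_only(trace, 300.0),
          passes_peak_and_dwell(trace, 0.5, 400.0, PLACEHOLDER_DWELL))
```

The flat pulse never comes near 300 g, so a peak-only check accepts it, yet it lingers above 150 g for 6 ms and fails the placeholder dwell limit. That is the kind of distinction a time requirement is meant to draw.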

Dr. Snively has been roundly criticized for having no time requirement in the Snell standard regarding acceleration. Actually, he freely admits that a time requirement makes good sense, but he also points out that (a) there does not appear to him to be sufficient information presently available to set the time limits accurately enough to do any good; (b) there are many other variables under investigation, including rate of change of acceleration (jerk), local acceleration at certain points within the head, hydrodynamic pressure transients in the brain cavity, and rotational acceleration, none of which can be proven from extant data to be the one, true “cause” of injury; and (c) peak acceleration does provide a useful, convenient and plausible means for making relative choices among helmets.

So he concludes that, until something provably better comes along, peak acceleration is an adequate measure of helmet performance (the way he uses it) for comparative evaluations.

Again, it has been argued that the “double drop” impact test favored by Snively should be replaced by a single “giant drop.” Which is more realistic in terms of actual crash conditions? The answer awaits empirical resolution by statistical crash data.

Lacking such data, Snively falls back on scrutinizing a helmet in terms of its potential weaknesses. Good, modern liner materials are either permanently crushable, like polystyrene foam, or are slow-rebound elastomers. Hence, Snively thinks it’s reasonable to obtain at least some feel for their behavior in a secondary impact. If it’s there, he figures, test it.

TESTING PROCEDURE

The Snell energy management requirement is divided into two parts. In the penetration test a hardened steel, pointed, 6-5/8-lb. striker is dropped onto the helmet with an energy of 65 lb.-ft. The striker, sort of a giant plumb-bob, is not supposed to penetrate the helmet. This is based on the requirement that the helmet should spread out an impact in space. In the impact test the helmet, mounted on an 11-lb. aluminum model of a head, is dropped first eight, then six, feet onto a steel anvil. The peak acceleration measured inside the head form is not supposed to exceed 300 gs on either drop. This is based on the requirement that the helmet should spread out an impact in time.
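The drop energies follow directly from weight times height. The sketch below also estimates the speed at contact. It assumes a free fall with no drag or guide-rail friction and treats the 11-lb head form as the whole falling mass; the text does not say whether the carriage adds weight.

```python
import math

G_FT_S2 = 32.174  # standard gravity, ft/s^2

def drop_energy_lb_ft(weight_lb, height_ft):
    """Energy released by a free fall: weight times height, in lb-ft."""
    return weight_lb * height_ft

def impact_speed_ft_s(height_ft):
    """Speed at contact after a frictionless free fall: v = sqrt(2 g h).
    Assumption: no drag, no guide-rail friction."""
    return math.sqrt(2.0 * G_FT_S2 * height_ft)

def drop_height_ft(weight_lb, energy_lb_ft):
    """Height needed for a given weight to deliver a given energy."""
    return energy_lb_ft / weight_lb

HEADFORM_LB = 11.0       # aluminum head form, from the text (carriage ignored)
STRIKER_LB = 6 + 5 / 8   # pointed penetration striker, from the text

for h in (8.0, 6.0):  # first and second Snell impact drops
    print(f"{h:.0f} ft: {drop_energy_lb_ft(HEADFORM_LB, h):.0f} lb-ft, "
          f"{impact_speed_ft_s(h):.1f} ft/s at contact")

print(f"striker height for 65 lb-ft: {drop_height_ft(STRIKER_LB, 65.0):.2f} ft")
```

On these assumptions the two impacts deliver 88 and 66 lb-ft (154 lb-ft in all) at roughly 22.7 and 19.6 ft/s, and the 6-5/8-lb. striker must fall a little under 10 feet to carry 65 lb-ft.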

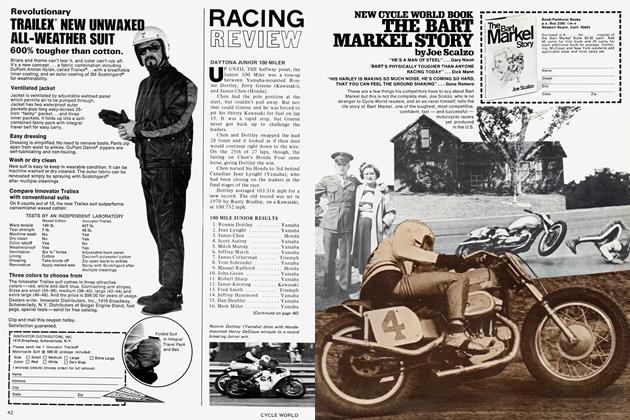

Figure 1 shows the Snell penetration test rig. The wicked-looking striker plummets down the tube from a measured height. Metal fingers at the base of the tube electrify the striker, and if it pierces through to the steel anvil beneath the helmet, the circuit is completed and the wall-mounted boxes squawk and flash; if not, the helmet passes the penetration test.

Figure 2 shows the impact test rig. Snively normally relies on the hemispherical anvil, but others are available. The flat anvil is like the surface of a street or racetrack, the angle iron simulates a structural member inside a helicopter, the bar simulates a race car's rollbar, the length of chain is for checking police riot helmets, and the propeller blade (hardened steel, razor sharp, and easily the most terrifying object in the bunch) is used on boat-racing helmets. Add to these an aluminum head form and its carriage. Electrical gear translates the accelerometer readings into oscilloscope traces recorded by a Polaroid camera. The results of an actual experimental sequence are shown in Figures 3A, B and C.

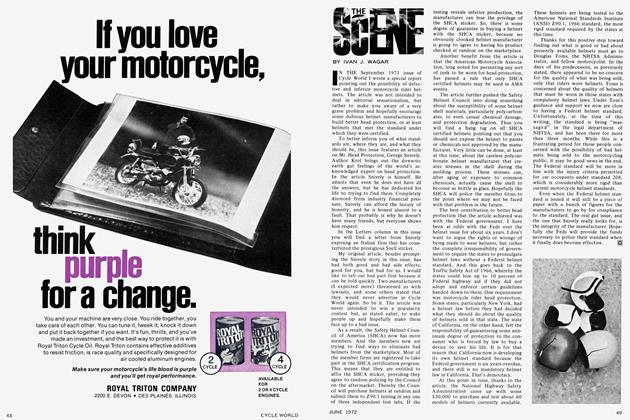

Figure 3A shows a Z90 drop, from 4-1/4 feet, of a prototype Japanese helmet on which Dr. Snively was doing some preliminary tests. Four milliseconds after impact the head form acceleration had reached its peak of 140 gs, well within Z90 requirements. Note the plateau following the peak. The low-frequency oscillation of 2.5 msec period is probably structural resonance in the helmet. The high-frequency ripple is accelerometer resonance.

In Figure 3B the same helmet is subjected to a Snell 1970 drop of 8 feet. Rise time is unchanged, indicating the helmet is still behaving linearly, or close to it, and peak gs are 195...which easily meets the Snell requirement. The waveform is much the same as before, but note the peak beginning to appear at the end of the plateau. This suggests the liner is starting to reach the limits of its damping capability.

To discover what happened to acceleration with an inadequate helmet, I asked Dr. Snively to fail this specimen deliberately by subjecting it to a maximum-height drop of 11½ feet onto a spot that had been weakened by previous impacts. It was necessary to go to such extreme lengths, drastically more severe than any existing standards, because the test helmet was actually very good. A low-quality helmet would behave much the same way under normal Snell test requirements.

Figure 3C shows the dramatic results of overloading the helmet. The liner bottomed, permitting peak gs to run off the face of the scope, probably exceeding 500 gs. Notice that the peak is reached much sooner than before. Between the higher peak and the shorter rise time the rate of change of acceleration (the slope of the curve) is very much larger than before, perhaps even larger than the curve indicates, since the accelerometer itself can respond only so fast. This impact produced a decisively louder, sharper noise than the previous two drops. Most impressive.
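The rate-of-change argument can be made concrete with the figures given for the three traces. This sketch uses the crudest possible measure, the average slope from impact to peak (peak g divided by rise time). It takes the 3B rise time to be the same 4 ms as 3A, since the text says it is unchanged. Figure 3C's rise time is not given, so only a lower bound can be computed, and only if the peak really did exceed 500 g as the text suggests.

```python
def mean_onset_rate(peak_g, rise_ms):
    """Average slope of the rising edge in g per millisecond.
    Assumption: the trace climbs from zero to its peak over rise_ms.
    The instantaneous slope (jerk) is higher wherever the curve is steeper
    than this average."""
    if rise_ms <= 0:
        raise ValueError("rise time must be positive")
    return peak_g / rise_ms

fig_3a = mean_onset_rate(140.0, 4.0)  # Z90 drop, 4-1/4 ft
fig_3b = mean_onset_rate(195.0, 4.0)  # Snell drop, 8 ft, rise time unchanged

# Figure 3C: if the peak exceeded 500 g and arrived in under 4 ms, its average
# slope must exceed that of a 500 g peak reached in exactly 4 ms.
fig_3c_lower_bound = mean_onset_rate(500.0, 4.0)

print(f"3A: {fig_3a:.2f} g/ms, 3B: {fig_3b:.2f} g/ms, "
      f"3C: > {fig_3c_lower_bound:.0f} g/ms")
```

Even this coarse average rises from 35 to about 49 g/ms between the first two drops, and then to well over 125 g/ms once the liner bottoms.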

It’s hard to watch the test without imagining that the 11-lb. chunk of aluminum is really your own head. In fact, Dr. Snively plans to film the test procedures, with sound, and to screen the film at industry meetings, dealers’ conventions, and trade shows. This movie, he hopes, will give pause to dealers now selling low-protection helmets and will get them thinking about their responsibilities to their customers.

A HELMET'S TWO TASKS

The penetration and impact tests taken together examine the two things a head protection system surely has to do: to spread out over space energy applied on a very small area, and to spread out over time energy applied nearly instantaneously.

This latter is exactly what a motorcycle suspension system does when a tire hits a rock or ledge: it transforms a large force acting over a short time into a smaller force acting over a longer time. Three independent variables are involved in these transients: the total energy of the blow, the spatial concentration of the applied energy, and the temporal concentration of the applied energy, which amounts to the approach velocity of the impact.
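The suspension analogy rests on the impulse relation: the same change in momentum spread over a longer time needs a smaller average force. The sketch below takes the 8-ft Snell drop, assumes the head form is brought to rest with no rebound, and prints the average deceleration for a few stopping times chosen purely for illustration. Real pulses peak well above their average, so these figures understate the peak g a test rig would record.

```python
import math

G_FT_S2 = 32.174  # standard gravity, ft/s^2

def mean_deceleration_g(drop_height_ft, stop_time_ms):
    """Average deceleration, in g, needed to stop a free-falling mass within
    stop_time_ms. Impulse: a_avg = delta_v / delta_t, where delta_v equals the
    contact speed. Assumptions: frictionless fall, no rebound."""
    v = math.sqrt(2.0 * G_FT_S2 * drop_height_ft)  # contact speed, ft/s
    a = v / (stop_time_ms / 1000.0)                 # average deceleration, ft/s^2
    return a / G_FT_S2

for t_ms in (1.0, 2.0, 5.0, 10.0):  # illustrative stopping times, not measured
    print(f"stop in {t_ms:4.1f} ms -> about {mean_deceleration_g(8.0, t_ms):.0f} g average")
```

The head form's weight drops out of the result: whatever the mass, doubling the time over which the liner brings the head to rest halves the average deceleration.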

A golf ball and an arrow may have the same kinetic energy, but the arrow obviously concentrates its energy into a smaller area. Similarly, an arrow and a bullet may have the same energy and same cross-sectional area, but the bullet has a much higher approach velocity.

Hence, a golf ball, an arrow, and a bullet, each with the same energy, will behave differently as they impact various materials, e.g., muscle tissue, wood, fiberglass, bone, soft mud, a tin can full of water. Impact energy alone does not tell the whole story; the distribution of this energy over space and time is equally important.

These considerations are involved in a certain curious difference between F/G and P/C shells. The Snell test applies 88 lb.-ft. of energy by dropping 11 lb. 8 ft. onto a 3.8-in. diameter hemisphere.

The same amount of energy (actually 86 lb.-ft.) can also be applied by shooting a 36-grain .22-caliber long rifle hollow-point bullet into a helmet from 100 yards away. Various tests seem to indicate that the F/G shell does a better job in the low-speed, large-area impact, while the P/C shell performs better in the high-speed, small-area impact.
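To see how differently the two loads arrive, the sketch below solves E = ½mv² for the approach speed of each and compares nominal areas. Assumptions: 7,000 grains to the pound; the energy figures exactly as given above; and the full 3.8-in. hemisphere diameter and a nominal 0.22-in. bullet diameter as crude stand-ins for contact area. The real contact patch of a hemisphere is far smaller than its full cross-section.

```python
import math

G_FT_S2 = 32.174        # standard gravity, ft/s^2 (converts lb weight to slugs)
GRAINS_PER_LB = 7000.0

def speed_for_energy(weight_lb, energy_lb_ft):
    """Approach speed (ft/s) at which a body of the given weight carries the
    given kinetic energy: v = sqrt(2 E / m), with m = weight / g in slugs."""
    mass_slug = weight_lb / G_FT_S2
    return math.sqrt(2.0 * energy_lb_ft / mass_slug)

def circle_area_in2(diameter_in):
    """Area of a circle of the given diameter, in square inches."""
    return math.pi * (diameter_in / 2.0) ** 2

drop_speed = speed_for_energy(11.0, 88.0)                  # Snell 8-ft drop
bullet_speed = speed_for_energy(36 / GRAINS_PER_LB, 86.0)  # 36-grain .22 bullet

# Assumption: nominal diameters stand in for contact area.
area_ratio = circle_area_in2(3.8) / circle_area_in2(0.22)

print(f"head form: {drop_speed:.1f} ft/s, bullet: {bullet_speed:.0f} ft/s")
print(f"bullet is ~{bullet_speed / drop_speed:.0f}x faster; "
      f"hemisphere's nominal area is ~{area_ratio:.0f}x larger")
```

With nearly equal energy, the bullet arrives roughly 45 times faster on a nominal area about 300 times smaller. Energy alone cannot distinguish the two loads, but speed and area can.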

THE "REALISTIC" COMBINATION

This brings up another matter on which opinions are divided: what combinations of spatial and temporal concentration of applied energy are most representative of real crashes? If area and approach speed are held constant it seems clear that the ability to manage greater total energy defines a better helmet.

Looked at the other way around the matter is not so clear. With a fixed amount of energy should the helmet favor large impact areas, or small? Should it favor high approach velocities, or low?

Snively contends that his present tests provide a typical combination of impact areas and approach velocities. If he’s right, then the people who demonstrate the superiority of P/C over F/G by bouncing “bullets” off the former are solving the wrong problem—they should be manufacturing bullet-proof vests instead of crash helmets.

The Imperial Protector Co. has recently introduced a bullet-proof vest made of laminated sheets of P/C, and it does a bang-up job, stopping everything up to a metal-piercing .357 Magnum. But there is no principle of testing that says the test conditions should be average, or normal, or typical.

On the contrary, the most critical and informative tests are usually those which deliberately stress the device far beyond its normal operating regime. The value of a diamond depends on the way it looks to an expert under a powerful magnifier, not the way it looks to an “average” person at a “normal” arms-length viewing distance.

In an almost exact analogy to the impact area and approach velocity of helmet tests, the crucial test of a motorcycle suspension system is not the way it behaves when hitting average-sized obstacles at average speeds but the way it reacts when hitting giant golly wobbles while moving flat out.

As is true in so many other areas, it may or may not turn out that helmet performance is best evaluated under extreme, abnormal and artificial test conditions, but a definite resolution of this problem awaits...you guessed... advances in the theoretical and empirical knowledge of head mechanics and impact tolerances.

RANDOM SAMPLING

The fourth area of Snell requirements is quality control. The Snell certification program evaluates consistency of production by random sampling of helmets from dealers’ shelves. To gain Snell approval (assuming the hats submitted for testing have passed the above hurdles) a manufacturer must sign an agreement with the Foundation authorizing it to purchase samples wherever it likes, up to a full 1 percent of total production, and agreeing to pay the costs of buying and testing these samples. Furthermore, the Foundation must be convinced that the manufacturer’s materials, processes, experience, and attitude are all consistent with the requirement for product consistency.

Whereas defining requirements is the principal difficulty in the areas of environmental resistance, retention system performance, and mechanical performance, the requirement for quality control is almost self-evident. Every helmet off the assembly line should be as much like all the others as is possible. The principal difficulty here is measurement. How do you measure the consistency of production? How do you control the manufacturing process to achieve product consistency?

One difficulty is that present testing methods are predominantly destructive: a helmet is destroyed on the Snell test rigs. Clearly, destructive testing cannot be applied to every helmet; all you can test this way is a sample, some fraction of production, and the more testing you do, the higher the selling price of the remaining helmets must become. Needed are accurate non-destructive tests fast enough and cheap enough to be used for 100% inspection right on the production line.
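Why destructive sampling struggles with rare flaws can be shown with a simple binomial model. This sketch assumes defects occur independently at a fixed rate, that the test catches every defective helmet drawn, and that the lot is large enough for the binomial approximation to hold. The 1 percent cap comes from the Snell agreement described above. The 10,000-helmet lot and the one-in-a-thousand defect rate are illustrative figures only.

```python
import math

def detection_probability(defect_rate, sample_size):
    """Chance that at least one defective unit appears in the sample:
    1 - (1 - p)^n. Assumption: independent, identically distributed defects."""
    return 1.0 - (1.0 - defect_rate) ** sample_size

def samples_needed(defect_rate, confidence):
    """Smallest sample size giving at least `confidence` chance of drawing
    one or more defective units."""
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - defect_rate))

lot_size = 10_000              # illustrative production run
sample_cap = lot_size // 100   # Snell may buy up to 1 percent of production
rate = 1 / 1000                # illustrative: one bad helmet per thousand

print(f"{sample_cap} samples: "
      f"{detection_probability(rate, sample_cap):.1%} chance of catching a bad one")
print(f"samples for 95% chance: {samples_needed(rate, 0.95)}")
```

Under these assumptions a full 1 percent sample has under a 10 percent chance of turning up a one-in-a-thousand flaw. Reaching 95 percent would mean destroying nearly 3,000 helmets, close to a third of the lot. This is the economic case for fast non-destructive inspection.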

THE PROFIT BIAS

Some flaws can be detected by the experienced eyes of helmet assemblers, e.g., an improperly seated strap mounting rivet (that might create local stresses), holidays or bubbles in an F/G shell, or a P/C shell that is thicker on one side, thinner on the other because of mold shift. Such judgments are subjective and qualitative, which doesn’t imply that they’re inaccurate or unreliable. Rather, the danger is that judgment will be systematically biased by pressures to maximize output and to minimize costs. Objective, quantitative, mechanical measurements are much less susceptible to such pressures.

A third difficulty with present testing is that it is predominantly “offline.” Buying helmets from retail stores means that weeks, even months, elapse between production and testing. During that time thousands of defective helmets could be manufactured and sold.

A new paint might react adversely with the P/C shell, seriously weakening it, and this condition might not be detected until months later when thousands of the faulty helmets were already on riders’ heads. This has actually happened.

The adhesive on legally required helmet reflectors might weaken P/C, again the problem not being detected for months. This, too, has happened.

A batch of P/C resin, which is quite sensitive in its raw form, might be exposed to temperatures or humidities which impair its strength. Thus a whole week’s production of as many as 10,000 helmets could be seriously defective and nobody might ever realize it. Dead customers don’t complain. While after-the-fact field testing should never be abandoned (it is, in a sense, the ultimate test because it is closest to the conditions of actual use), it cannot do the whole job by itself. On-line testing needs to be improved.

"THE MEXICAN SKYROCKET"

These three difficulties in measuring quality control are normal for most any manufactured product, but crash helmets possess another unique difficulty that is perhaps the most serious of all, and is largely the motivation for Dr. Snively’s entire approach toward crash helmets.

Simply put, the only completely significant measurement of a helmet’s performance is what it does in a crash.

Like a Mexican skyrocket, there is no satisfactory way to tell in advance how it will work when you try to use it. Ballpoint pens you can give a quick and sufficient test in the store. Apples you can inspect for ripeness, bruises, and wormholes. But there is really no way that you can be sure the particular helmet you’re buying is free of flaws, manufacturing defects, or design shortcomings.

Spreading a P/C shell to remove it from the mold can, in some instances, create residual stresses causing the helmet to shatter on slight impact. Suppose only one hat out of a thousand is so affected. How can you tell whether you’re buying one of the 999 good ones, or the one bad one? You can’t. The manufacturer, however, can attack this problem by using more expensive molds that preclude the need for spreading the fresh shell. Eagle Sport of Kansas has started using a complex five-part mold on its latest models exactly for this reason.

The crash helmet is a unique product, Snively points out, because it is difficult for a consumer to recognize a good design when he sees one, and it is virtually impossible for him to detect a defect in a particular helmet. There are still first-time helmet shoppers who expect a nice soft sponge rubber liner and find it difficult to believe that a seemingly rigid expanded polystyrene liner actually does the better job.

Happily, most salesmen in motorcycle shops are able to steer their customers right on this. The helmet user’s cost of being wrong in his choice could be serious injury or even his life. With most other products, in contrast, the buyer can pretty well tell a good design from a poor one, he can pretty well detect a flawed unit, and his cost of mistaken judgment is not too great.

But helmets are like parachutes: at the time you’re sure you’ve got a bad one, it’s too late to do much worrying about it. Because of these unique factors Snively believes the helmet buyer is entitled to especial protection against bad merchandise.

JUDGING QUALITY CONTROL

As a result, the quality control aspect of the Snell certification program is largely judgmental. Snively wants to know the people making the helmets. He wants to evaluate their attitudes.

Do they want to make the best possible product? A sound product that comfortably meets Snell requirements? A helmet just good enough to squeak by? Are they aware of quality control problems and are they prepared to deal with them according to some workable plan? These are the sorts of questions that a purchasing agent for a large corporation would ask before committing his company to making continuing purchases from a supplier.

Unlike the purchasing agent, however, Snively does not represent a single large customer, but a diffuse public, many of whom may not even be impressed by a Snell sticker on a helmet. So every time he makes a judgmental decision about a helmet manufacturer he runs way out on a limb, and there are offended suppliers who’d love to chop off that limb. It is obvious that Snively has been burned in the past, for he is extremely cautious about making any charges against specific companies or products which he can’t back up with objective test results.

It is equally obvious that he believes some manufacturers care less about a helmet’s protective capability than its marketability and short-run profitability. The value of a Snell approval hinges on the foundation’s track record for credibility, so Snively wisely refrains from hurling public accusations he is unable to prove. This is a refreshing relief from scare-mongering sensationalism. On the other hand, Snively is not going to issue a Snell approval to a helmet unless its manufacturer has convinced him that the company is truly dedicated to building a consistently high-performance product.

UNCONSIDERED REQUIREMENTS

If these four areas of Snell requirements (environmental, retention, impact and quality control) seem reasonably straightforward to you, making allowance, of course, for the limits of present scientific knowledge, it’s only because you haven’t considered all the other requirements that could be imposed. It may seem that existing standards have touched all the bases, but this is definitely not so, as can be shown by three examples.

First, existing standards contemplate impact protection only on the top of the skull: roughly, above a line from the eyebrow through to the top of the ear. Neither Z90 nor Snell 1970 requires any protection for the base of the skull, the temples, or the jaw. Yet helmet buyers, without any urging from the approving organizations, increasingly prefer full- and total-coverage helmets for the undoubtedly greater protection they afford. Snively is considering extending the coverage requirements in future Snell standards.

Second, existing standards impose no requirements on helmet stiffness or rigidity, i.e., resistance to crushing under a steady load. In examining full-coverage helmets you will discover considerable differences in their lateral rigidity and, like many other buyers, you may choose the stiffer one, all other things being equal.

Present shells are as rigid as they are only to meet the penetration requirements. There is no explicit requirement for shell rigidity, even though rigidity is quite probably one of the few things you automatically take for granted about a helmet and which you look for when buying one. For the time being, Snively is satisfied with letting rigidity be set indirectly via penetration requirements.

Finally, existing standards do not countenance face protection of any kind. The rapidly growing popularity of total-coverage helmets, helmet-mounted face guards, and strap-on face protectors can be interpreted in only one way: thousands of motorcyclists are consciously choosing head protection that exceeds formalized requirements not only in degree, but in kind.

In each of these three examples we find manufacturers offering and consumers demanding products which transcend all existing formal requirements. This should thoroughly demolish the notion that helmet makers and users always follow docilely behind trailblazing standard-setters, especially government rulemakers, who are solely responsible for leading the world onward and upward toward the great goal, safety. In some areas you seem to be well out in front of your leaders.

Through all of this runs a common thread...the distinction between advice and coercion. When CYCLE WORLD tests a motorcycle and finds its lights inadequate, it says so. This may impress some prospective buyers. Others may yawn indifferently.

But when state or federal governments find lights inadequate, they pass laws, and everybody (manufacturer and buyer alike) is forced to conform to the edict. The Snell Foundation’s approval/disapproval program is roughly analogous to the findings in a CW road test.

If you think the past judgments of Snell or CW are sound, you’ll listen closely to what they now say. If not, you’re free to make your own choice.

(Next month: J. G. Kroll continues his exploration of the Snell Foundation with emphasis on its relation to the government. Also: Should helmets be graded? How strong a role can government play in protecting the helmet buyer? How much protection will a helmet offer you 10, 20 or even 50 years from now? How much of a guarantee of protection can a risk-taking man demand? These are some of the fascinating questions he will discuss.)